Setting up state-aware orchestration (Private preview; Enterprise, Enterprise+)

Set up state-aware orchestration to automatically determine which models to build by detecting changes in code or data and only building the changed models each time a job is run.

The dbt Fusion Engine is currently available for installation in:

- Local command line interface (CLI) tools (Preview)

- VS Code and Cursor with the dbt extension (Preview)

- dbt platform environments (Private preview)

Join the conversation in our Community Slack channel #dbt-fusion-engine.

Read the Fusion Diaries for the latest updates.

Prerequisites

To use state-aware orchestration, make sure you meet these prerequisites:

- You must have a dbt Enterprise or Enterprise+ account and a Developer seat license.

- You have updated the environment that will run state-aware orchestration to the dbt Fusion engine. For more information, refer to Upgrading to dbt Fusion engine.

- You must have a dbt project connected to a data platform.

- You must have access permission to view, create, modify, or run jobs.

- You must set up a deployment environment.

- (Optional) To customize behavior, you have configured your model or source data with advanced configurations.

State-aware orchestration is available for SQL models only. Python models are not supported.

Default settings

By default, for an Enterprise-tier account upgraded to the dbt Fusion engine, any newly created job is automatically state-aware. Out of the box, without custom configurations, a job run only builds models when either the code has changed or a source has new data.

Create a job

For existing jobs, make them state-aware by selecting Enable Fusion cost optimization features in the Job settings page.

To create a state-aware job:

- From your deployment environment page, click Create job and select Deploy job.

- Options in the Job settings section:

  - Job name: Specify the name, for example, Daily build.

  - (Optional) Description: Provide a description of what the job does (for example, what the job consumes and what the job produces).

  - Environment: By default, it’s set to the deployment environment you created the state-aware job from.

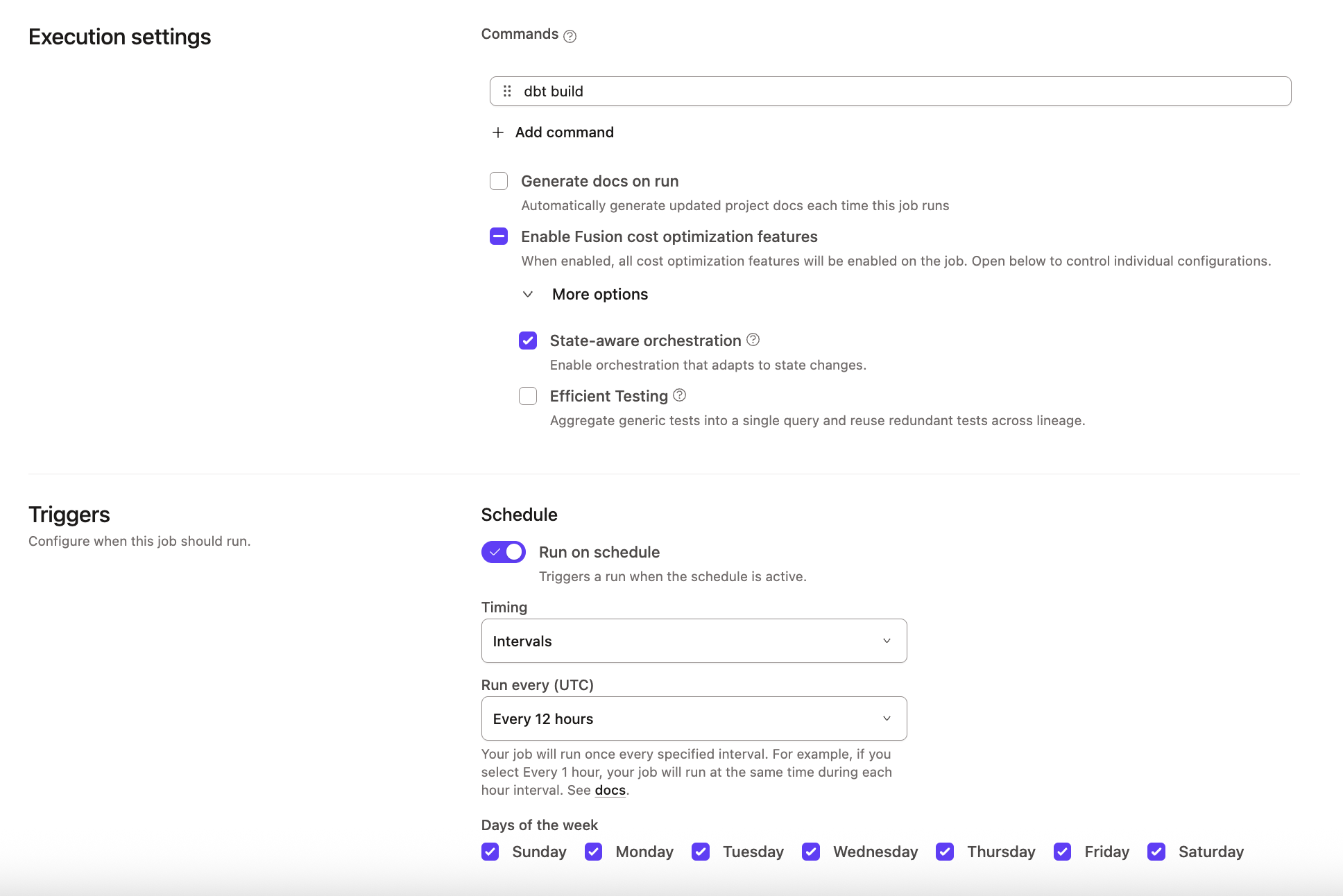

- Options in the Execution settings and Triggers sections:

  - Execution settings section:

    - Commands: By default, it includes the dbt build command. Click Add command to add more commands that you want to be invoked when the job runs.

    - Generate docs on run: Enable this option if you want to generate project docs when this deploy job runs.

    - Enable Fusion cost optimization features: Select this option to enable State-aware orchestration. Efficient testing is disabled by default. You can expand More options to enable or disable individual settings.

  - Triggers section:

    - Run on schedule: Run the deploy job on a set schedule.

      - Timing: Specify whether to schedule the deploy job using Intervals (run the job every specified number of hours), Specific hours (run the job at specific times of day), or Cron schedule (run the job on a schedule specified using cron syntax).

      - Days of the week: By default, it’s set to every day when Intervals or Specific hours is chosen for Timing.

    - Run when another job finishes: Run the deploy job when another upstream deploy job completes.

      - Project: Specify the parent project that has that upstream deploy job.

      - Job: Specify the upstream deploy job.

      - Completes on: Select the job run status(es) that will enqueue the deploy job.

- (Optional) Options in the Advanced settings section:

  - Environment variables: Define environment variables to customize the behavior of your project when the deploy job runs.

  - Target name: Define the target name to customize the behavior of your project when the deploy job runs. Environment variables and target names are often used interchangeably.

  - Run timeout: Cancel the deploy job if the run time exceeds the timeout value.

  - Compare changes against: By default, it’s set to No deferral. Select either Environment or This Job to let dbt know what it should compare the changes against.

- Click Save.

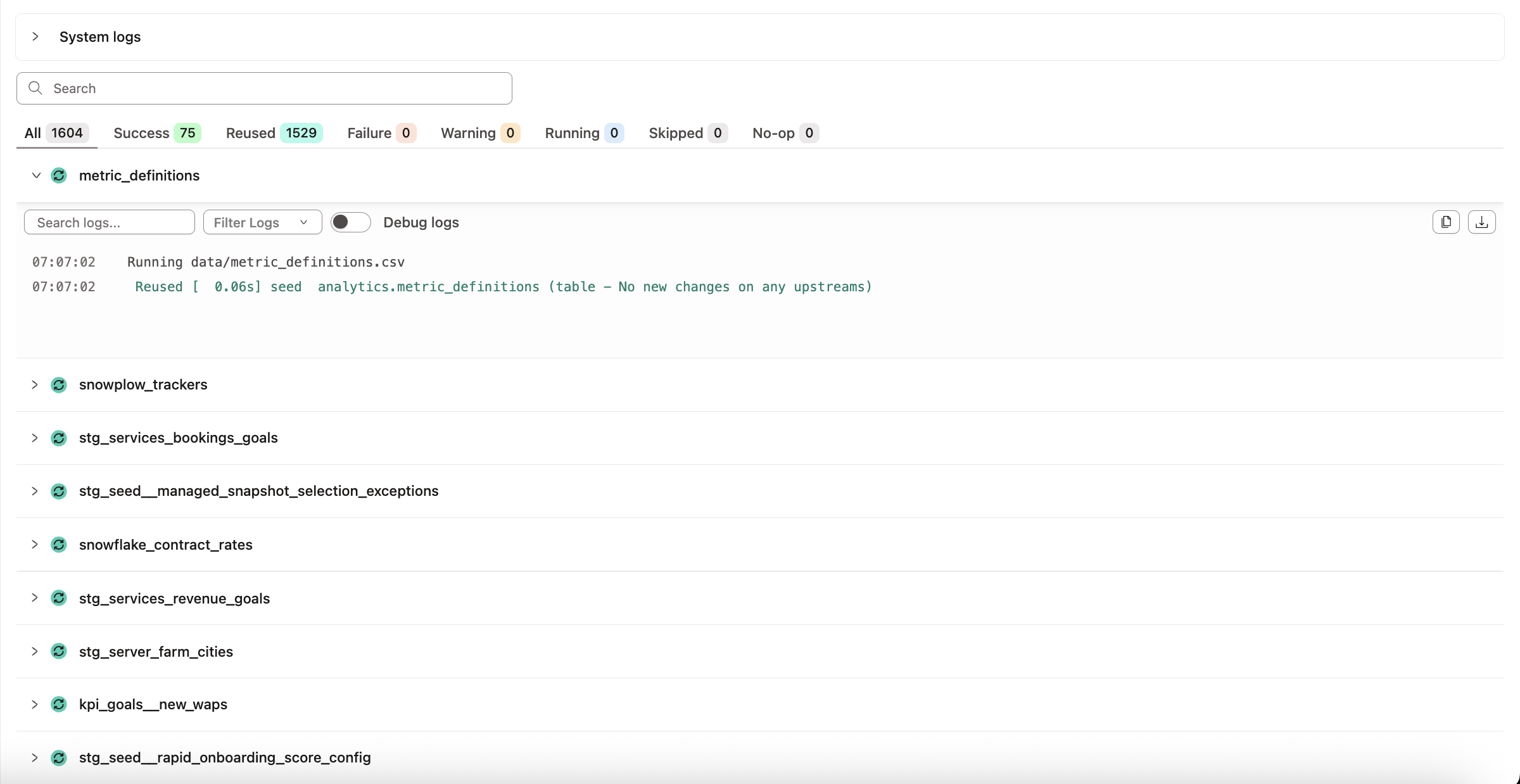

You can see which models dbt builds in the run summary logs. Models that weren't rebuilt during the run are tagged as Reused, with context about why dbt skipped rebuilding them (saving you unnecessary compute!). You can also see the reused models under the Reused tab.

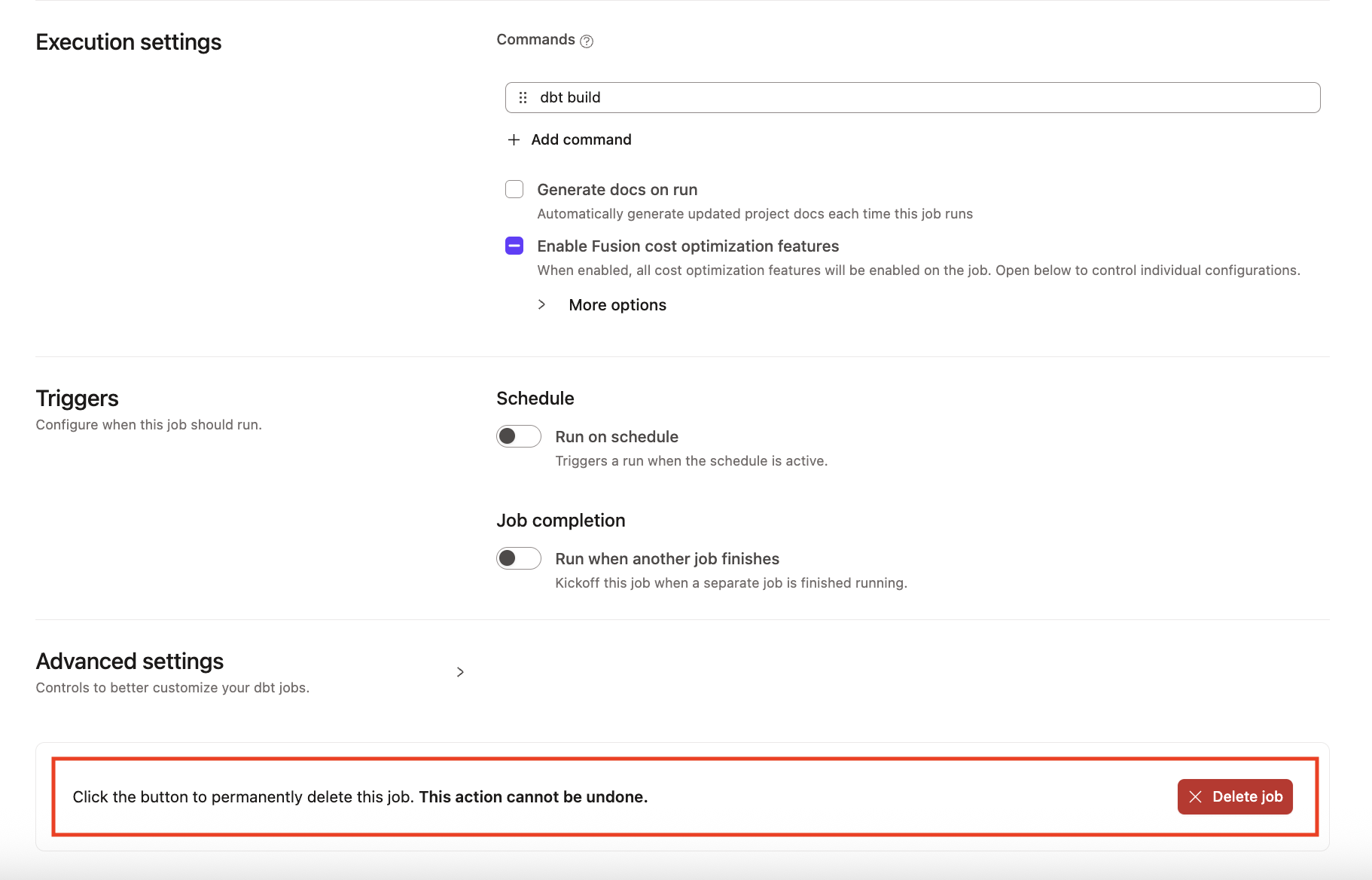

Delete a job

To delete a job or multiple jobs in dbt:

- Click Deploy on the navigation header.

- Click Jobs and select the job you want to delete.

- Click Settings on the top right of the page and then click Edit.

- Scroll to the bottom of the page and click Delete job to delete the job.

- Confirm your action in the pop-up by clicking Confirm delete in the bottom right to delete the job immediately. This action cannot be undone. However, you can create a new job with the same information if the deletion was made in error.

- Refresh the page, and the deleted job should now be gone. If you want to delete multiple jobs, you'll need to perform these steps for each job.

If you're having any issues, feel free to contact us for additional help.

Advanced configurations

By default, we use the warehouse metadata to check if sources (or upstream models in the case of Mesh) are fresh. For more advanced use cases, dbt provides other options that enable you to specify what gets run by state-aware orchestration.

You can customize with:

- loaded_at_field: Specify a specific column to use from the data.

- loaded_at_query: Define a custom freshness condition in SQL to account for partial loading or streaming data.

If a source is a view in the data warehouse, dbt can’t track updates from the warehouse metadata when the view changes. Without a loaded_at_field or loaded_at_query, dbt treats the source as "always fresh" and emits a warning during freshness checks. To check freshness for sources that are views, add a loaded_at_field or loaded_at_query to your configuration.

You can define either loaded_at_field or loaded_at_query, but not both.
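As a rough illustration of the two options, a source definition might look like the sketch below. The source, table, and column names (raw_orders, raw_events, _loaded_at, ingested_at) are hypothetical, and the sketch assumes both properties are set under the source's config block. Check the resource freshness config reference for the exact placement supported by your dbt version.

```yaml
sources:
  # Hypothetical source backed by tables: read freshness from a timestamp column.
  - name: raw_orders
    config:
      loaded_at_field: _loaded_at   # assumed column name
    tables:
      - name: orders

  # Hypothetical source backed by a view: warehouse metadata can't track it,
  # so freshness comes from a custom query instead.
  - name: raw_events
    config:
      loaded_at_query: |
        select max(ingested_at) from raw.events.clicks
    tables:
      - name: clicks
```

Each source sets only one of the two properties, in line with the rule that they can't be combined.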

You can also customize with:

- updates_on: Change the default from any to all so it doesn’t build unless all upstreams have fresh data, reducing compute even more.

- build_after: Don’t build a model more often than every x period, to reduce build frequency when you need data less often than sources are fresh.

To learn more about model freshness and build after, refer to model freshness config. To learn more about source and upstream model freshness configs, refer to resource freshness config.

Customizing behavior

You can optionally configure state-aware orchestration when you want to fine-tune orchestration behavior for these reasons:

- Defining source freshness:

  By default, dbt uses metadata from the data warehouse. You can instead:

  - Specify a custom column and dbt will go to that column in the table instead

  - Specify a custom SQL statement to define what freshness means

  Not all source freshness is equal, especially with partial ingestion pipelines. You may want to delay a model build until your sources have received a larger volume of data or until a specific time window has passed.

  You can define what "fresh" means on a source-by-source basis using a custom freshness query. This lets you:

  - Add a time difference to account for late-arriving data

  - Delay freshness detection until a threshold is reached (for example, number of records or hours of data)

- Reducing model build frequency:

  Some models don’t need to be rebuilt every time their source data is updated. To control this:

  - Set a refresh interval on models, folders, or the project to define how often they should be rebuilt at most

  - This helps avoid overbuilding and reduces costs by only running what's really needed

- Changing the default from any to all:

  Based on what a model depends on upstream, you may want to wait until all upstream models have been refreshed rather than going as soon as there is any new data.

  - Change what orchestration waits on from any to all for models, folders, or the project to wait until all upstream models have new data

  - This helps avoid overbuilding and reduces costs by building models once everything has been refreshed
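To make the threshold idea from the source freshness item above more concrete, here is a minimal sketch of a custom freshness query that only reports a load timestamp once a batch contains at least 1,000 records. The source, table, and column names (raw_events, raw.events.clicks, batch_loaded_at) and the 1,000-row threshold are assumptions for illustration only. Confirm how your warehouse and dbt version evaluate loaded_at_query before relying on this pattern.

```yaml
sources:
  - name: raw_events                # hypothetical source name
    config:
      loaded_at_query: |
        -- Report the newest batch timestamp, ignoring batches that
        -- haven't yet reached the assumed 1,000-row threshold.
        select max(batch_loaded_at)
        from (
          select batch_loaded_at
          from raw.events.clicks
          group by batch_loaded_at
          having count(*) >= 1000
        ) as complete_batches
    tables:
      - name: clicks
```

With a query like this, the reported timestamp stays unchanged while a batch is still partially loaded, so small trickles of data don't count as a fresh source.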

To configure and customize behavior, use the build_after config in any of the following places:

- dbt_project.yml at the project level in YAML

- model/properties.yml at the model level in YAML

- model/model.sql at the model level in SQL

These configurations are powerful because you can define a sensible default at the project level or for specific model folders, and override it for individual models or model groups that require more frequent updates.

Example

Let's use an example to illustrate how to customize our project so a model and its parent model are rebuilt only if they haven't been refreshed in the past 4 hours — even if a job runs more frequently than that.

Jaffle Shop has recently expanded globally and wants to cut costs. To reduce spend, the team found out about dbt's state-aware orchestration and wants to rebuild models only when needed. Maggie, the analytics engineer, wants to configure her dbt jaffle_shop project to rebuild certain models only if they haven't been refreshed in the last 4 hours, even if a job runs more often than that.

To do this, she uses the model freshness config. This config helps state-aware orchestration decide when a model should be rebuilt.

Note that for every freshness config, you're required to set values for both count and period. This applies to all freshness types: freshness.warn_after, freshness.error_after, and freshness.build_after.

Refer to the following samples for using the freshness config in the model file, in the project file, and in the config block of the model.sql file:

Model YAML:

    models:
      - name: dim_wizards
        config:
          freshness:
            build_after:
              count: 4         # how long to wait before rebuilding
              period: hour     # unit of time
              updates_on: all  # only rebuild if all upstream dependencies have new data
      - name: dim_worlds
        config:
          freshness:
            build_after:
              count: 4
              period: hour
              updates_on: all

Project file:

    models:
      <resource-path>:
        +freshness:
          build_after:
            count: 4
            period: hour
            updates_on: all

Config block:

    {{
        config(
            freshness={
                "build_after": {
                    "count": 4,
                    "period": "hour",
                    "updates_on": "all"
                }
            }
        )
    }}

With this config, dbt:

- Checks if there's new data in the upstream sources

- Checks when dim_wizards and dim_worlds were last built

Because updates_on is set to all, dbt rebuilds the models only if all upstream dependencies have new data and at least 4 hours have passed since the last build.

You can override freshness rules set at higher levels in your dbt project. For example, in the project file, you set:

    models:
      +freshness:
        build_after:
          count: 4
          period: hour
      jaffle_shop: # this needs to match your project `name:` in dbt_project.yml
        staging:
          +materialized: view
        marts:
          +materialized: table

This configuration means that every model in the project has a build_after of 4 hours. To change this for specific models or groups of models, you could set:

    models:
      +freshness:
        build_after:
          count: 4
          period: hour
      marts: # only applies to models inside the marts folder
        +freshness:
          build_after:
            count: 1
            period: hour

If you want to exclude a model from the freshness rule set at a higher level, set freshness: null for that model. With freshness disabled, state-aware orchestration falls back to its default behavior and builds the model whenever there’s an upstream code or data change.
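A minimal sketch of that opt-out in a model YAML file might look like this. The model name stg_payments is hypothetical.

```yaml
models:
  - name: stg_payments   # hypothetical model name
    config:
      freshness: null    # opt out of the build_after rule inherited from the project
```

All other models keep the project-level build_after setting. Only this model reverts to building whenever its upstream code or data changes.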

Differences between all and any

- Since Maggie configured updates_on: all, both models must have new upstream data to trigger a rebuild. If only one model has fresh data and the other doesn't, nothing is built, which reduces unnecessary compute costs and saves time.

- If Maggie wanted these models to rebuild more often (for example, if any upstream source has new data), she would use updates_on: any instead:

    freshness:
      build_after:
        count: 1
        period: hour
        updates_on: any

This way, if either dim_wizards or dim_worlds has fresh upstream data and enough time has passed, dbt rebuilds the models. This method helps when the need for fresher data outweighs the costs.

Limitation

The following section lists considerations when using state-aware orchestration:

Deleted tables

If a table was deleted in the warehouse, and neither the model’s code nor the data it depends on has changed, state-aware orchestration does not detect a change and will not rebuild the table. This is because dbt decides what to build based on code and data changes, not by checking whether every table still exists. To build the table, you have the following options:

- Clear cache and rebuild: Go to Orchestration > Environments and click Clear cache. The next run will rebuild all models from a clean state.

- Temporarily disable state-aware orchestration: Go to Orchestration > Jobs. Select your job and click Edit. Under Enable Fusion cost optimization features, disable State-aware orchestration and click Save. Run the job to force a full build, then re-enable the feature after the run.
